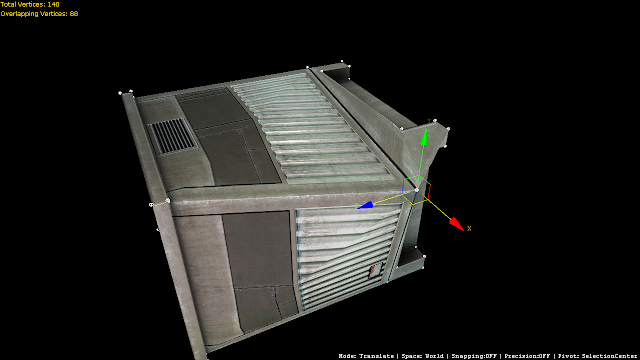

Editing models inside my engine is something I have wanted to support for a long time. Especially when you're not the one creating the assets but still have to work with them inside the editor, it can be time-consuming to send a model back because of incorrect material names, or because a mesh should be split to optimize rendering, or vice versa. This tool should eliminate the need for artists to re-export their models because of tiny mistakes or changes, and should provide a much cleaner pipeline in general.

Currently only the very core of this feature has been implemented (as seen in the video) and a lot more is still to follow, so here is a brief overview of how the fully functional tool should work once it's complete. When a new model (.fbx or .x) is imported, it is processed by the content pipeline and turned into an .xnb file. An .xml file is attached to the source (model) file; it contains all changes to the model and is processed at build-time. By default this .xml file is empty and nothing is altered.

At run-time a mesh can be converted to an Editable Mesh (I'm trying to keep this similar to 3D Studio Max's naming conventions), which allows vertex and triangle selection with support for any type of transformation supported by the gizmo component (translate, rotate and scale in local- and world-space). These transformations are stored inside Actions (I will talk about Actions a lot more at a later time, but they are basically what you use to support undo/redo in any kind of editing software) and written to the model .xml. The model is then rebuilt the next time the project is built, and the actions are applied. The .xml can hold any type of alteration, including material renaming, splitting meshes and deleting or adding vertices alongside vertex transformations.
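To make the Action idea a bit more concrete, here is a minimal sketch in Python (not the engine's actual code). The class name `TranslateVerticesAction`, the XML element names and the `build_model` helper are all made up for illustration; the real Actions and file layout may look quite different. It only shows the general shape: an action can be applied, undone, written to XML and replayed on an untouched source model at build-time.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass


@dataclass
class TranslateVerticesAction:
    """Hypothetical action: moves a set of vertices by a fixed offset."""
    mesh: str
    indices: list
    offset: tuple

    def apply(self, meshes):
        verts = meshes[self.mesh]
        dx, dy, dz = self.offset
        for i in self.indices:
            x, y, z = verts[i]
            verts[i] = (x + dx, y + dy, z + dz)

    def undo(self, meshes):
        # Undo is simply the same action with the offset negated.
        inverse = tuple(-c for c in self.offset)
        TranslateVerticesAction(self.mesh, self.indices, inverse).apply(meshes)

    def to_xml(self):
        node = ET.Element("TranslateVertices", mesh=self.mesh)
        node.set("indices", " ".join(str(i) for i in self.indices))
        node.set("offset", " ".join(repr(c) for c in self.offset))
        return node

    @staticmethod
    def from_xml(node):
        return TranslateVerticesAction(
            mesh=node.get("mesh"),
            indices=[int(i) for i in node.get("indices").split()],
            offset=tuple(float(c) for c in node.get("offset").split()),
        )


def save_actions(actions):
    """Serialize the recorded actions; an empty list gives an empty file."""
    root = ET.Element("ModelActions")
    for action in actions:
        root.append(action.to_xml())
    return ET.tostring(root, encoding="unicode")


def build_model(source_meshes, xml_text):
    """Build-time step: copy the source data, then replay every action."""
    meshes = {name: list(verts) for name, verts in source_meshes.items()}
    for node in ET.fromstring(xml_text):
        TranslateVerticesAction.from_xml(node).apply(meshes)
    return meshes
```

Note that `build_model` works on a copy, so the source data is never modified; only the built result carries the edits.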

Because the source model remains untouched, the model will still build with all transformations and alterations applied whenever it is re-exported from a DCC tool like 3D Studio Max or Maya. An example: a material named '#46 Material' is renamed at run-time to 'corridor_floor'. If the artist then changes the name of '#46 Material' to 'corridor_floor' or something else in the source model, the run-time change is ignored because the original material name (#46 Material) can no longer be found. In a worst-case scenario this ignores the material properties you stored for the original name. Material properties are stored elsewhere, so no data is actually lost, and even in the worst case you are only a small step away from re-applying your previous changes. A warning dialog listing the failed conversions should help with this, making it potentially very quick and painless to manually re-assign changes that are never lost, just ignored until resolved. The same applies to vertex transformations, but these can be a bit more difficult to keep track of once the indices of a mesh change inside the .fbx source file.
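The rename example above could be sketched roughly like this in Python. The function name `apply_material_renames` and the data layout are assumptions for illustration only; the point is that a rename whose original name is missing is skipped and reported rather than treated as an error.

```python
def apply_material_renames(material_names, renames):
    """Apply stored renames to the material names of a freshly imported model.

    material_names: names found in the source model.
    renames: list of (original_name, new_name) pairs stored by the editor.
    Returns (resulting names, renames that could not be applied).
    """
    result = list(material_names)
    failed = []
    for original, new in renames:
        if original in result:
            result[result.index(original)] = new
        else:
            # Original name no longer exists: ignore the change, but keep it
            # so a warning dialog can list it for manual re-assignment.
            failed.append((original, new))
    return result, failed
```

With the source still containing '#46 Material' the rename applies and the failed list is empty. Once the artist renames that material in the source, the same stored rename ends up in the failed list and nothing else is touched.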

There is still a significant amount of work to be completed before this feature takes its full shape, but with this first proof of concept up and running I believe it will be a very valuable tool to have available, and a lot of fun to create :)

It proved to be quite easy to convert the gizmo to support vertex manipulation (and selection). I will add these changes to the CodePlex project at some point in the future...

Sunday 26 June 2011

Wednesday 15 June 2011

Updated blog layout

I had plans to do this a while ago, but never got around to actually doing it. Last night I kind of stumbled upon a new, wider template that still looks pretty similar to the old one. The most noticeable benefit is the extra space available for bigger images and videos, and I'm pretty glad about how the new template turned out.

For lack of other news, I dug up some screenshots of Project Sunburn.

|

| An objective-based level from Project Sunburn |

|

| Enemy drone firing at the player in one of our test setups. |

Let me know what you think about this new layout in the comment section :)

Tuesday 14 June 2011

Environment Mapping (w/ Normal Maps)

A while ago I was trying to get environment maps (aka cube-maps) to work with SunBurn's deferred renderer, with reasonable success. Last week I had another go at it, this time in my own renderer. Because the normal-mapping information was already available inside the shader, it was relatively easy to do this time around (and I had already solved most of the problems the first time).
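For reference, the core of environment mapping with a normal map is just a reflection of the view direction around the perturbed normal. Below is a small Python sketch of that math (the actual shader is HLSL and is not shown here). All function names are made up for illustration, and it assumes a normal map that stores the tangent-space normal in the usual [0, 1] colour range.

```python
import math


def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def decode_normal(rgb):
    """Map a normal-map texel from [0, 1] to a unit vector in [-1, 1]."""
    return normalize(tuple(2.0 * c - 1.0 for c in rgb))


def tangent_to_world(n_ts, tangent, bitangent, normal):
    """Rotate a tangent-space normal into world space using the TBN basis."""
    return normalize(tuple(
        n_ts[0] * t + n_ts[1] * b + n_ts[2] * n
        for t, b, n in zip(tangent, bitangent, normal)
    ))


def reflect(incident, normal):
    """Same formula as HLSL's reflect(i, n): i - 2 * dot(n, i) * n."""
    d = dot(normal, incident)
    return tuple(i - 2.0 * d * n for i, n in zip(incident, normal))


def cube_lookup_direction(camera_pos, world_pos, normal_sample,
                          tangent, bitangent, normal):
    """Direction used to sample the cube-map for one pixel."""
    view = normalize(tuple(p - c for p, c in zip(world_pos, camera_pos)))
    n = tangent_to_world(decode_normal(normal_sample),
                         tangent, bitangent, normal)
    return reflect(view, n)
```

A flat normal-map texel of (0.5, 0.5, 1.0) leaves the surface normal unchanged, so the result is a plain mirror reflection; any other texel tilts the lookup direction, which is what makes the reflection follow the bumps.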

It was supposed to be a transparent (forward) effect for windows etc., but somehow turned into an opaque cube-mapped deferred shader... Not sure how that happened :)

Below you can see what the shader looks like at the moment: the first two images show the 'forward' shader and the last two use the deferred shader (which includes point lights, specularity etc.). The forward shader will be modified to support alpha-mapping, which is what it was originally designed for anyway.

|

| Forward shader - environment map, with normal map and simple directional light. |

|

| Forward shader - similar setup as above. |

|

| The deferred shader - with a point light on the left and right (with very strong colors to highlight the effect) |

|

| Deferred shader - with the deferred render targets (left to right: color, normals, depth, lighting) |

The next step is to further develop the forward rendering pass, for transparency mostly. This includes depth-sorting of the objects along with Composite Lighting that calculates approximate lighting for objects that don't support deferred shading.
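As a rough idea of what the depth-sorting part could look like, here is a small Python sketch. The function name and the (name, position) tuples are only for illustration; it assumes objects are sorted by their distance along the camera's forward axis and drawn back to front, which is the common approach for alpha-blended geometry.

```python
def sort_back_to_front(objects, camera_pos, camera_forward):
    """Order transparent objects so the farthest one is drawn first.

    objects: iterable of (name, position) tuples.
    camera_forward: unit vector pointing where the camera looks.
    """
    def view_depth(item):
        _, pos = item
        # Distance along the view direction, not the straight-line distance.
        return sum((p - c) * f
                   for p, c, f in zip(pos, camera_pos, camera_forward))

    return sorted(objects, key=view_depth, reverse=True)
```

Sorting per object like this is only an approximation: large or intersecting transparent meshes can still blend in the wrong order.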

Resources:

Custom Model Effect XNA Sample - Source for the cube-map processor and the basics for the environment mapping shader.

Shawn Hargreaves on Cube-mapping - Some interesting bits about cube-mapping, closely tied to the XNA sample above.

Sunday 5 June 2011

A new (Deferred) Renderer [Part 1]

For a long time I have used SunBurn as my primary rendering solution. This helped a great deal while developing Needle Juice, Over Night and more recently: Project Sunburn. With no new game projects lined up with Core Engine, I have decided to experiment with my own rendering solution, based on Catalin’s Deferred Rendering sample along with information from many other sources including a Killzone 2 presentation from 2007.

The image above shows the most recent unit-test: filling the hardware depth buffer with the G-buffer depth so that post-deferred geometry (i.e. transparent surfaces drawn with forward rendering) and other visuals such as vertex-lines can be drawn. This is done during the final pass that combines the geometry data and lighting, using the ‘DEPTH’ semantic. The depth buffer has to be refilled because all depth is lost after switching render targets. On the PC this can be prevented using PreserveContents, but that does not work on the Xbox; writing depth in the combine pass solves the problem entirely.
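What value ends up in the ‘DEPTH’ output depends on how the G-buffer stores depth. If it already holds post-projection depth (z/w), it can be written out as-is. If it holds linear view-space depth instead, it has to be converted first. The Python sketch below shows that conversion for a Direct3D-style perspective projection with depth in [0, 1]; the function name and the "linear depth divided by the far plane" storage format are assumptions for illustration, not necessarily what this renderer does.

```python
def linear_to_device_depth(linear_depth, near, far):
    """Convert linear depth (view distance / far, in (0, 1]) to the
    non-linear [0, 1] depth a D3D-style perspective projection produces."""
    view_z = linear_depth * far
    return (far / (far - near)) * (1.0 - near / view_z)
```

A point on the near plane maps to 0 and a point on the far plane maps to 1, with most of the precision concentrated close to the camera.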

Some other experiments include texture projection as seen below.

Texture projection has several great uses, including deferred decals, light shapes and video projection on the scene's geometry (as seen in Splinter Cell: Conviction). Back while developing Over Night we used shadow-mapping in places where texture projection could have been much cheaper. A good example of this is the blue light coming from outside through the shaded window, covering most of the room as seen below. The second image uses a texture to simulate a similar effect without having to re-draw the entire shadow map every frame; it simply samples from the texture and projects it into screen-space.

Looking back at Needle Juice, where we used a directional light with shadows to draw light coming through the windows, this is another place where texture projection would have been a cheaper and more elegant solution to our problem (also preventing light-leaking, which is very common with directional lighting).

|

| Needle Juice: directional-light coming through the window, required shadow-mapping. |
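The texture projection discussed above boils down to transforming a world-space position by the projector's view-projection matrix and turning the result into texture coordinates. Here is a minimal Python sketch of that step. The function names are made up, and it assumes a row-major matrix applied to a column vector and a texture origin in the top-left corner; in a deferred renderer the world position would be reconstructed from the G-buffer depth.

```python
def transform(matrix, point):
    """Multiply a row-major 4x4 matrix by the column vector (x, y, z, 1)."""
    x, y, z = point
    v = (x, y, z, 1.0)
    return tuple(sum(row[i] * v[i] for i in range(4)) for row in matrix)


def projector_uv(projector_view_proj, world_pos):
    """Return the (u, v) at which to sample the projected texture,
    or None when the point is outside the projector's frustum."""
    cx, cy, _, cw = transform(projector_view_proj, world_pos)
    if cw <= 0.0:
        return None  # behind the projector
    # Perspective divide, then map [-1, 1] to [0, 1]; v is flipped because
    # texture coordinates grow downwards.
    u = 0.5 * cx / cw + 0.5
    v = -0.5 * cy / cw + 0.5
    if 0.0 <= u <= 1.0 and 0.0 <= v <= 1.0:
        return (u, v)
    return None
```

Unlike a shadow map, nothing has to be re-rendered per frame here: the texture is fixed and only this lookup runs for each lit pixel.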

I will continue working on the renderer for the time to come. With such a wealth of presentations, papers and samples on deferred rendering (and graphics programming in general), it will keep me busy for a while… Until next time! :)
